@Mathijs Hi Matthijs, I'm trying to do the hand-eye calibration, but my transformed coordinates seem wrong. Any clue what I did wrong?

Please use code blocks for code instead of screenshots. You can insert a codeblock:

    # Put your code between three backticks: ```
    # Your code here
    # finish with three backticks: ```
    import cv2
    import numpy as np
    hand_coords = ...

My guess is that you used pixel coordinates as eye_coords, but the units need to be the same:

You must have a set of corresponding points in both systems to use the function. These points should be in the same units (e.g., millimeters or meters). You also need at least 4 points to get an accurate result. The more points you have, the more accurate the result will be.

What usually helps is to first calibrate the camera (using ArUco markers or a ChArUco board) to get an accurate pixel-to-mm conversion. Once that is correct (verify by measuring known objects in your image), you can move on to the hand-eye calibration.
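As a rough illustration of the pixel-to-mm step, the sketch below derives a scale factor from one marker of known size and applies it to a detected pixel position. The 50 mm marker, the corner pixel coordinates, and the helper names `mm_per_pixel` and `pixel_to_mm` are made-up assumptions. It also assumes the camera looks straight down at a flat table and that lens distortion is negligible.

```python
import math

def mm_per_pixel(corner_a, corner_b, side_mm):
    """Scale factor from two adjacent marker corners (pixels) and the known side length (mm)."""
    side_px = math.dist(corner_a, corner_b)
    return side_mm / side_px

def pixel_to_mm(point_px, origin_px, scale):
    """Convert a pixel position to millimeters relative to a chosen origin pixel."""
    return ((point_px[0] - origin_px[0]) * scale,
            (point_px[1] - origin_px[1]) * scale)

# Hypothetical values: a 50 mm marker whose top edge spans 200 px in the image.
scale = mm_per_pixel((100.0, 100.0), (300.0, 100.0), side_mm=50.0)
print(scale)  # 0.25 (mm per pixel)

# A detection at pixel (500, 300), measured from the marker corner at (100, 100).
print(pixel_to_mm((500.0, 300.0), (100.0, 100.0), scale))  # (100.0, 50.0)
```

Measuring a second known object with the same scale is a quick way to verify the conversion before moving on.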

It looks like you are using invalid points.

The calibrateHandEye() function determines the transformation between a hand (the robot gripper) and its eye (the camera). The result is a rotation and a translation, which together form a 3×4 matrix [R | t] describing how the two coordinate systems relate. In OpenCV, cv2.calibrateHandEye() takes four inputs, each a list with one entry per robot pose: the rotations and translations of the gripper in the robot base frame (R_gripper2base, t_gripper2base) and the rotations and translations of the calibration target in the camera frame (R_target2cam, t_target2cam). It returns R_cam2gripper and t_cam2gripper, which map camera coordinates to gripper coordinates.


So the function generates the homogeneous transformation matrix between your hand and your camera.

This is how you can convert something you detect with your camera into a position relative to your hand.
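To make the camera-to-hand conversion concrete, here is a minimal sketch that packs a rotation and a translation into a 4×4 homogeneous matrix and applies it to a camera-frame point. The pose values and the helper names `make_homogeneous` and `transform_point` are made up for illustration; `R_cam2gripper` and `t_cam2gripper` follow OpenCV's naming for the outputs of calibrateHandEye().

```python
import numpy as np

def make_homogeneous(R, t):
    """Combine a 3x3 rotation and a 3-vector translation into a 4x4 matrix."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def transform_point(T, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    p = np.append(np.asarray(point, dtype=float), 1.0)  # [x, y, z, 1]
    return (T @ p)[:3]

# Hypothetical pose: the camera is turned 180 degrees about x relative to the
# gripper and sits 30 units away along the gripper's z axis.
R_cam2gripper = np.array([[1.0, 0.0, 0.0],
                          [0.0, -1.0, 0.0],
                          [0.0, 0.0, -1.0]])
t_cam2gripper = np.array([0.0, 0.0, 30.0])

T_cam2gripper = make_homogeneous(R_cam2gripper, t_cam2gripper)

# A point 30 units in front of the camera, expressed in the gripper frame.
print(transform_point(T_cam2gripper, [0.0, 0.0, 30.0]))
```

Note the appended 1: multiplying only a 3×3 rotation by [x, y, z] would skip the translation entirely.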

You need a list of at least four examples, each giving the position of the same object relative to your camera AND relative to your hand.

A single example: if you can SEE an object IN your hand, the correct values for that example are:

Gripperpoint: [0,0,0]

Camerapoint: [x, y, z]

Another example point: if something is 10 cm in front of your gripper, you KNOW it is on a table, and your camera is parallel to the table and 30 cm directly above the object:

Gripperpoint: [0,10,0]

Camerapoint: [0,0,30]

So to get a good list of corresponding points, a series of steps could be:

- Put an object in your gripper

- Place it somewhere

- Remember exactly how you move your robot away from the object

- Write down by what [xyz] you moved the robot

- Detect the object with your camera

- Write down the [xyz] in camera view
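Once such a list exists, one common way to turn corresponding 3D points into a rotation and a translation is a least-squares rigid fit (the Kabsch algorithm). The sketch below is a swapped-in illustration, not what calibrateHandEye() does internally. The function name `fit_rigid_transform` and the sample points are made up, and it assumes both point sets use the same units and do not all lie on one line.

```python
import numpy as np

def fit_rigid_transform(src, dst):
    """Least-squares R, t such that dst ~ R @ src + t (Kabsch algorithm, no scaling)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)      # 3x3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # guard against returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

# Made-up camera-frame points and a known transform used to generate gripper points.
camera_points = np.array([[0.0, 0.0, 30.0], [10.0, 0.0, 30.0],
                          [0.0, 10.0, 30.0], [10.0, 10.0, 25.0]])
R_true = np.array([[0.0, -1.0, 0.0],
                   [1.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0]])       # 90 degree turn about z
t_true = np.array([5.0, -2.0, 1.0])
gripper_points = camera_points @ R_true.T + t_true

R_est, t_est = fit_rigid_transform(camera_points, gripper_points)
print(np.round(R_est, 6))
print(np.round(t_est, 6))
```

With real measurements the fit will not be exact, so it is worth checking how far `R_est @ p + t_est` lands from each recorded gripper point.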

@GuusParis Alright, I got the transformation matrix. Then I have to multiply a test coordinate by T to get the robot coordinate, right?

testCoord = np.array([40.0, 40.0, 30.0])

translatedCoord = T @ testCoord

But the result is way off.

appreciate your answer

Multiplying the transformation matrix with your detected eye coordinate should give you your hand coordinate.

- Can you show me how you got the points to generate your transformation matrix?

- Can you share the picture from your camera from which you get [40,40,30]?

- Can you share a picture of your setup?

@GuusParis This is not exactly what the setup looks like, but I think you will get the point. The middle bottle is the test coordinate, [40,40,30].

    import cv2
    import numpy as np

    hand_coords = np.array([[385.65, -305.66, -135.7], [383.83, -657.7, -135.7],
                            [77.6, -741.0, -135.7], [134.21, -330.0, -135.7]])
    eye_coords = np.array([[31.48, 22.67, 26.2], [366.48, 34.00, 26.2],
                           [365.22, 274.54, 26.2], [40.30, 264.47, 26.2]])

    # rotation matrix between the target and camera
    R_target2cam = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
                             [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]])

    # translation vector between the target and camera
    t_target2cam = np.array([0.0, 0.0, 0.0, 0.0])

    # transformation matrix
    T, _ = cv2.calibrateHandEye(hand_coords, eye_coords,
                                R_target2cam, t_target2cam)
    # print(T)

    # my idea is to test the coordinate which used to calibrate it, so this will return 385.65, -305.66
    testCoord = np.array([31.48, 22.67, 26.2])
    translated = T @ testCoord
    print(translated)

The other test coordinate (40,40,30) was obtained from the detected point (x and y), with z measured manually.

I have the same problem: my transformation matrix returns the wrong coordinates.

    hand_coords = np.array([[-404.57, -130.84, -328.98], [-464.52, -408.47, -328.98],
                            [-323.90, -297.08, -328.98], [-477.24, -223.86, -328.98]])
    eye_coords = np.array([[449.26, 260.65, 0.0], [195.16, 212.51, 0.0],
                           [320.90, 336.62, 0.0], [374.93, 184.35, 0.0]])

    # rotation matrix between the target and camera
    R_target2cam = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
                             [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]])

    # translation vector between the target and camera
    t_target2cam = np.array([0.0, 0.0, 0.0, 0.0])

    # transformation matrix
    T, _ = cv2.calibrateHandEye(hand_coords, eye_coords,
                                R_target2cam, t_target2cam)
    print(T)

    [[ 0.89482581 -0.03126387 -0.44531937]
     [-0.41475196  0.31076904 -0.85522127]
     [ 0.165129    0.94997115  0.2651174 ]]

    test = np.array([-404.57, -130.84, -328.98])
    transformed_coords = T @ test

    [-211.42794591 408.4858717 -278.31878702]

But that isn't correct, it should be: [449.26, 260.65, 0.0]

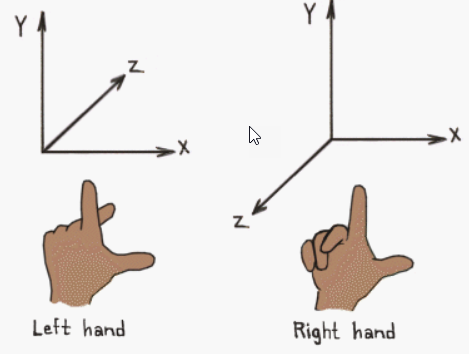
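Since all the posted points share one z value in each frame, one possible alternative is to fit a 2D affine map between robot x/y and image x/y with ordinary least squares. This is a swapped-in technique, not calibrateHandEye(), and it assumes the objects lie on one flat plane and that lens distortion is negligible. The helper names `fit_affine_2d` and `apply_affine_2d` are hypothetical; the point values are the x/y columns of the arrays posted above.

```python
import numpy as np

def fit_affine_2d(src_xy, dst_xy):
    """Least-squares 2x3 affine A such that dst ~ A @ [x, y, 1]."""
    src_xy = np.asarray(src_xy, dtype=float)
    dst_xy = np.asarray(dst_xy, dtype=float)
    X = np.hstack([src_xy, np.ones((len(src_xy), 1))])   # N x 3 design matrix
    coeffs, *_ = np.linalg.lstsq(X, dst_xy, rcond=None)  # 3 x 2 solution
    return coeffs.T                                       # 2 x 3 affine matrix

def apply_affine_2d(A, xy):
    """Map one 2D point through the 2x3 affine matrix."""
    return A @ np.append(np.asarray(xy, dtype=float), 1.0)

# x/y columns of the posted hand_coords and eye_coords.
robot_xy = np.array([[-404.57, -130.84], [-464.52, -408.47],
                     [-323.90, -297.08], [-477.24, -223.86]])
pixel_xy = np.array([[449.26, 260.65], [195.16, 212.51],
                     [320.90, 336.62], [374.93, 184.35]])

A = fit_affine_2d(robot_xy, pixel_xy)
predicted = np.array([apply_affine_2d(A, p) for p in robot_xy])

print(A)
print(np.abs(predicted - pixel_xy).max())  # worst error on the fitted points
```

Four points barely over-determine the six affine parameters, so a large worst-case error here would suggest that some correspondences were measured or paired incorrectly; more points make that check more meaningful.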

@GuusParis Is it a problem when the x-axis of the robot is not in the same direction as the x-axis of the camera?

Not necessarily. But both frames should use the same kind of coordinate system, so they should both follow the right-hand rule.

@GuusParis I'm not sure I understand.

What do you mean by the right-hand rule?

In almost all cases, the right-hand coordinate system is used.
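One way to check this for a given frame: in a right-handed system, the cross product of the x and y axes points along the z axis. The sketch below tests that for axis vectors written in some common reference frame; the function name `is_right_handed` is made up for illustration.

```python
import numpy as np

def is_right_handed(x_axis, y_axis, z_axis):
    """True if x cross y points in the same direction as z."""
    return float(np.dot(np.cross(x_axis, y_axis), z_axis)) > 0.0

# Standard axes: right-handed.
print(is_right_handed([1, 0, 0], [0, 1, 0], [0, 0, 1]))   # True

# Same x and y, but z flipped: left-handed.
print(is_right_handed([1, 0, 0], [0, 1, 0], [0, 0, -1]))  # False
```

If the robot frame and the camera frame differ in handedness, no pure rotation plus translation can map one onto the other.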